Apriori algorithm是关联规则里一项基本算法。是由Rakesh Agrawal和Ramakrishnan Srikant两位在1994年提出的布尔关联规则的频繁项集挖掘算法(详情:Fast Algorithms for Mining Association Rules)。算法的名字是因为算法基于先验知识(prior knowledge).根据前一次找到的频繁项来生成本次的频繁项。关联规则的目的在于在一个数据集中找出项之间的关系,也称之为购物蓝分析 (market basket analysis)。例如,购买佳能的顾客,有70%的可能也会买在一个月之类买HP打印机。这其中最有名的例子就是”尿布和啤酒“的故事了。

几个概念:

关联规则A->B的支持度support=P(AB),指的是事件A和事件B同时发生的概率。置信度confidence=P(B|A)=P(AB)/P(A),指的是发生事件A的基础上发生事件B的概率。比如说在规则Computer => antivirus_software , 其中 support=2%, confidence=60%中,就表示的意思是所有的商品交易中有2%的顾客同时买了电脑和杀毒软件,并且购买电脑的顾客中有60%也购买了杀毒软件。

如果事件A中包含k个元素,那么称这个事件A为k**项集,并且事件A满足最小支持度阈值的事件称为频繁k项集**。

Apriori算法的基本思想:

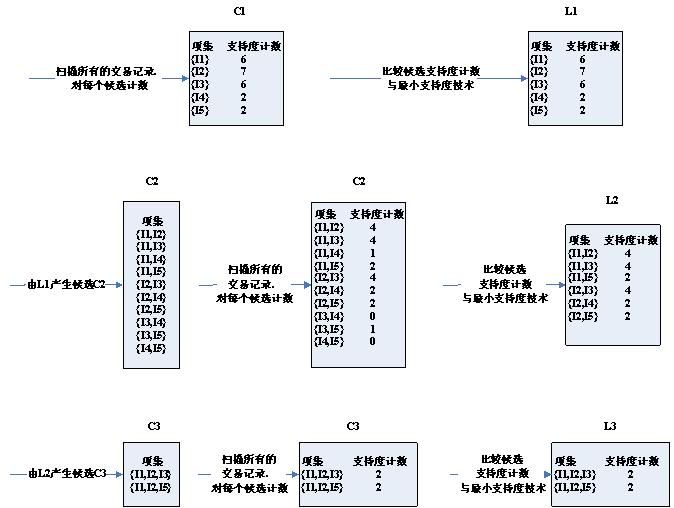

过程分为两个步骤:第一步通过迭代,检索出事务数据库中的所有频繁项集,即支持度不低于用户设定的阈值的项集;第二步利用频繁项集构造出满足用户最小信任度的规则。具体做法就是:首先找出频繁1-项集,记为L1;然后利用L1来产生候选项集C2,对C2中的项进行判定挖掘出L2,即频繁2-项集;不断如此循环下去直到无法发现更多的频繁k-项集为止。每挖掘一层Lk就需要扫描整个数据库一遍。算法利用了一个性质:Apriori 性质:任一频繁项集的所有非空子集也必须是频繁的。意思就是说,生成一个k-itemset的候选项时,如果这个候选项有子集不在(k-1)-itemset(已经确定是frequent的)中时,那么这个候选项就不用拿去和支持度判断了,直接删除。具体而言:

1) 连接步

为找出Lk(所有的频繁k项集的集合),通过将Lk-1(所有的频繁k-1项集的集合)与自身连接产生候选k项集的集合。候选集合记作Ck。设l1和l2是Lk-1中的成员。记li[j]表示li中的第j项。假设Apriori算法对事务或项集中的项按字典次序排序,即对于(k-1)项集li,li[1]<li[2]<……….<li[k-1]。将Lk-1与自身连接,如果(l1[1]=l2[1])&&( l1[2]=l2[2])&&……..&& (l1[k-2]=l2[k-2])&&(l1[k-1]<l2[k-1]),那认为l1和l2是可连接。连接l1和l2 产生的结果是{l1[1],l1[2],……,l1[k-1],l2[k-1]}。

2) 剪枝步

CK是LK的超集,也就是说,CK的成员可能是也可能不是频繁的。通过扫描所有的事务(交易),确定CK中每个候选的计数,判断是否小于最小支持度计数,如果不是,则认为该候选是频繁的。为了压缩Ck,可以利用Apriori性质:任一频繁项集的所有非空子集也必须是频繁的,反之,如果某个候选的非空子集不是频繁的,那么该候选肯定不是频繁的,从而可以将其从CK中删除。

算法伪代码如下:

//算法:Apriori |

举个例子,来源于书中(见参考文献1)的例子。

如图中所示,有9个事务,其算法流程如下:

以上就有了频繁项集,然后根据得到的频繁项集和给定置信度算关联规则。置信度其实是一个条件概率。关联规则产生就是根据每个生成的频繁项集,产生其所有非空子集,然后根据子集和原来的事务库中循环比较。大于给定重复次数的就是满足条件的。例如针对频繁集{I1,I2,I5}。可以产生哪些关联规则?该频繁集的非空真子集(求子集的具体方法在前面python中a+=b和a=a+b的问题中已经阐述)有{I1,I2},{I1,I5},{I2,I5},{I1 },{I2}和{I5},对应置信度如下:

I1&&I2->I5 confidence=2/4=50%

I1&&I5->I2 confidence=2/2=100%

I2&&I5->I1 confidence=2/2=100%

I1 ->I2&&I5 confidence=2/6=33%

I2 ->I1&&I5 confidence=2/7=29%

I5 ->I1&&I2 confidence=2/2=100%

如果min_conf=70%,则强规则有I1&&I5->I2,I2&&I5->I1,I5 ->I1&&I2。

具体而言:因为这几天在学python,所以就用python实现如下。里面有注释,刚开始用python,所以把一些问题也注释在里面了,代码可能不怎么清晰。仅供参考。

# coding=UTF-8 |

运行结果如下:

-----result-------- |

有网友指出,本页代码在其python环境运行结果不正确,因其环境用的是python2.x,而本人用的环境是python3.x,在python3.x中,两个整数相除是得到小数的,而python2.x里是整数,因此导致支持度计算不正确,只需要改正本页代码中的196行和256行将分子转换成浮点即可(例如分别改为t_supp = count*1.0/self.size,supp = count*1.0/self.size)。本人初学python,一些细节不清楚,望理解。感谢网友指出。

参考文献:

Jiawei Han and Micheline Kamber.Data Mining: Concepts and Techniques[M]. San Francisco: Morgan Kaufmann Publishers.2006:234-240